KEY TAKEAWAYS

- GPT-4o, OpenAI's newest AI model, introduces voice and image recognition, enhancing natural interaction and understanding.

- With faster speeds and the ability to express emotions, GPT-4o delivers a more human-like and engaging user experience.

- The model's multimodal capabilities allow for integrated analysis of voice commands and visual information, expanding its potential applications.

CONTENT

Read about the features of GPT-4o, OpenAI’s most advanced AI model yet, offering voice and image recognition, faster speeds, emotional expression, and more.

INTRODUCTION

On May 13, 2024, OpenAI announced its latest AI model, GPT-4o, which aims to make ChatGPT smarter and easier to use. The model will be available to all ChatGPT users, including those on the free tier, giving everyone access to OpenAI’s cutting-edge technology.

KEY FEATURES OF GPT-4O

Powerful Voice and Image Recognition:

GPT-4o has robust voice and image recognition capabilities, so it can handle voice commands and analyze images directly. Through the ChatGPT app, it can act as a voice assistant on a smartphone, using the phone’s camera and microphone to understand the world around it.

Faster Speed:

Compared to previous models, GPT-4o offers significantly faster processing. It can respond to voice input in as little as 232 milliseconds, with an average of 320 milliseconds, which is close to human reaction times in conversation.

Emotional Expression:

GPT-4o’s voice generation can convey emotion and vary its tone and speaking rate: it can laugh during a conversation, sing, or speak dramatically. Users can choose from five different voices, which makes interactions feel more natural and realistic, as if scenarios from the film “Her” were coming to life.

Multimodal Integration:

GPT-4o can combine voice commands with image input in a single analysis, providing richer feedback. For instance, when users take a photo and ask about an object in it, GPT-4o can explain it in detail by voice.

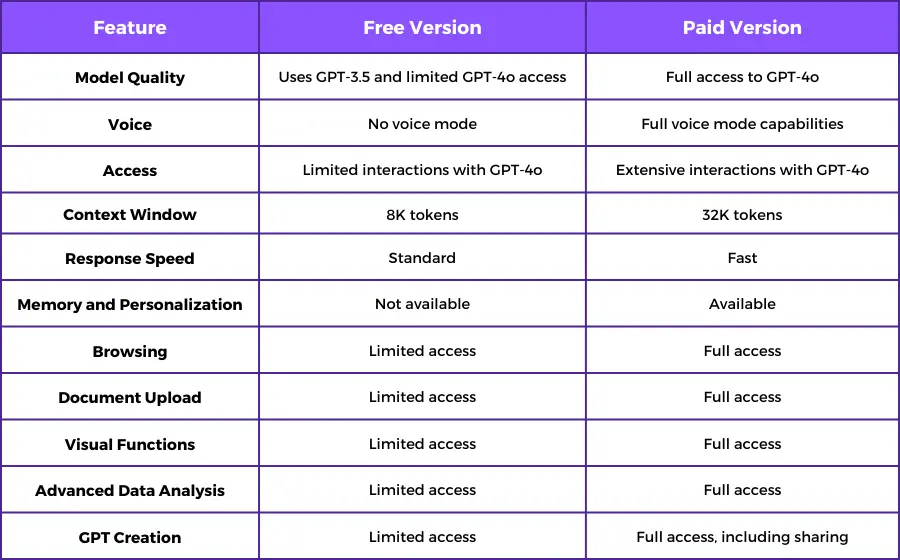

A Comparison of GPT-4o: Free vs. Paid

INDUSTRY CONTEXT AND COMPETITION

The release comes as OpenAI strives to stay ahead in an increasingly competitive AI arms race. Competitors, including Google and Meta, are building ever more powerful large language models to power chatbots and to integrate AI into a range of other products.

The OpenAI event was held a day before Google’s annual I/O developer conference, where Google is expected to announce updates to its Gemini AI model.

OpenAI’s update also precedes Apple’s expected AI announcements at its Worldwide Developers Conference next month, which may include new ways to integrate AI into the next iPhone or iOS version.

Meanwhile, the latest GPT version could also benefit Microsoft, which has invested billions of dollars in OpenAI and embeds OpenAI’s technology in its own products.


USER EXPERIENCE ENHANCEMENTS

OpenAI executives demonstrated real-time voice conversations with ChatGPT in which it walked through a math problem, told a bedtime story, and gave coding advice. ChatGPT can speak in natural, human-like voices as well as in robotic tones, and can even sing parts of its responses. It can also analyze images of charts and discuss them.

ChatGPT can now automatically translate and respond in multiple languages, with OpenAI claiming support for over 50 languages. “The new voice (and video) modes are the best computer interface I’ve ever used,” said OpenAI CEO Sam Altman in a blog post announcing the news.

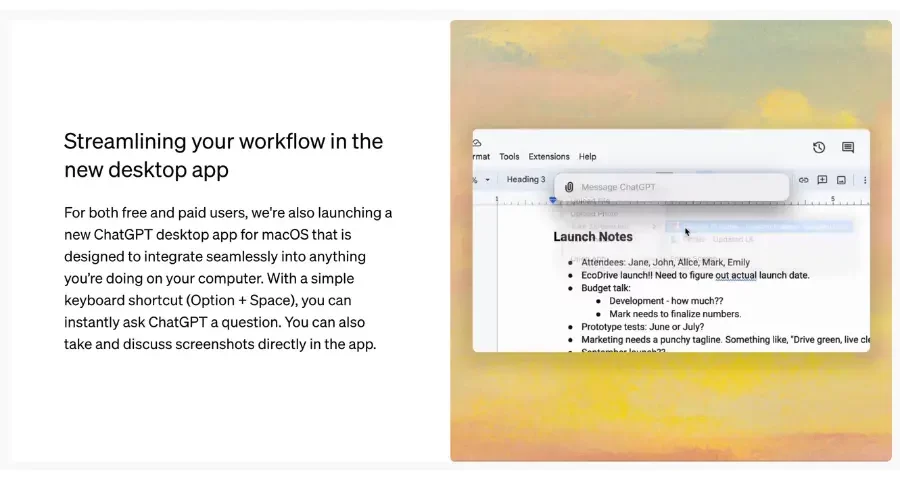

OpenAI CTO Mira Murati said the company is rolling out a ChatGPT desktop app that lets users hold voice conversations directly from their computer. It starts with Voice Mode and will later include GPT-4o’s new audio and video capabilities. The app is initially available to Plus users on macOS, will become more broadly available in the coming weeks, and a Windows version is planned for later this year.

(source: OpenAI)

Developers building custom chatbots for OpenAI’s GPT Store will also have access to GPT-4o, and the store is now open to non-paying users as well. Free ChatGPT users get a limited number of interactions with GPT-4o before the tool automatically falls back to the older GPT-3.5 model; paid users get more extensive access to the latest model.

IN SUMMARY

GPT-4o is a big step forward for AI, making interactions easier and more natural. OpenAI continues to lead the way despite competition from Google, Meta, and Apple. We’ve covered GPT-4o’s basics here. Next time, CoinRank will show you how different ChatGPT versions respond to the same questions. Stay tuned to learn more!

